In an era dominated by technological advancement, the rapid evolution of Artificial Intelligence (AI) is both a marvel and a challenge. From self-driving cars that promise safer roads to AI-driven healthcare tools that can help diagnose diseases more accurately, the potential benefits of AI are substantial. That potential carries a matching responsibility: as AI continues to evolve, the ethical implications of its use need careful consideration. This article explores the complexity of AI ethics and the importance of balancing innovation with responsibility.

The Promise of AI

AI technology has woven itself into the fabric of our lives in ways that were once the realm of science fiction. Machine learning algorithms analyze vast datasets to uncover patterns and insights that humans may overlook. Natural language processing enables real-time translations and provides assistance through conversational agents. In sectors like finance, AI optimizes trading strategies, while in agriculture, it enhances productivity through precision farming techniques.

The innovations brought forth by AI are not just limited to efficiency and economic growth; they also hold the potential for social good. For instance, AI systems can aid in disaster response, enhance educational tools for personalized learning, and provide critical insights for climate change research. With these transformative potentials, the drive for innovation is strong, leading to unprecedented investments and development in this field.

The Ethical Quagmire

However, as AI innovation accelerates, its ethical challenges demand equal attention. At the forefront of these concerns are issues related to bias, privacy, accountability, and transparency.

Bias and Fairness

One significant concern is the inherent bias present in AI systems. These biases often stem from the data used to train machine learning algorithms. If the training data is skewed or unrepresentative, the algorithms can perpetuate and even exacerbate existing inequalities. For example, facial recognition technology has been shown to perform less accurately on individuals with darker skin tones, raising questions about fairness and discrimination. The challenge lies in ensuring that AI systems are designed and trained in a way that safeguards against bias and promotes equity.
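One simple way to make this kind of disparity visible is to compare a model's accuracy across demographic groups. The sketch below is only an illustration under stated assumptions: the function names `accuracy_by_group` and `accuracy_gap`, the group labels, and the toy data are all hypothetical, and accuracy is just one of several fairness measures an organization might choose.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} for parallel sequences of labels,
    predictions, and group tags (all hypothetical inputs)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Toy data, invented for illustration only.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

per_group = accuracy_by_group(y_true, y_pred, groups)
print(per_group)                # {'a': 1.0, 'b': 0.5}
print(accuracy_gap(per_group))  # 0.5
```

A large gap does not by itself explain where the bias comes from, but it signals that the training data or the model deserves a closer look before deployment.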

Privacy and Data Protection

As AI systems rely heavily on data, the ethical use of this data is a pressing concern. The collection, storage, and utilization of personal information raise questions about consent and privacy. How can organizations ensure that they respect user privacy while still harnessing the power of AI? Striking a balance between innovation and the protection of personal data is critical to maintaining public trust.

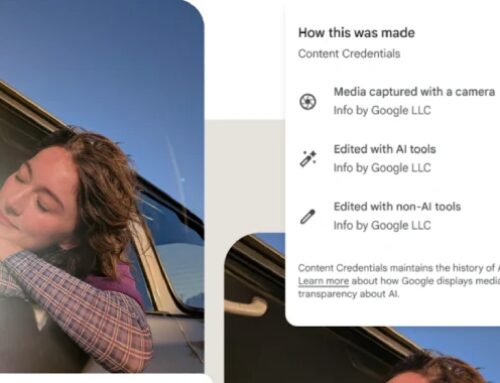

Accountability and Transparency

In the case of AI decision-making, determining accountability can be complex. If an AI system makes a mistake—whether in judicial sentencing, loan approval, or medical diagnosis—who is responsible? Is it the developer, the data scientist, the organization that deployed the AI, or the AI itself? Moreover, the "black box" nature of many AI models poses a transparency challenge. When algorithms operate in ways that are opaque or difficult to interpret, stakeholders (from end-users to regulators) may struggle to understand how decisions are made.

Toward Responsible Innovation

Responsible AI innovation requires a multi-faceted approach. The following strategies can help balance innovation with ethical constraints.

1. Establishing Ethical Guidelines

Organizations should develop comprehensive ethical guidelines tailored to their specific AI applications. These guidelines should address issues such as bias mitigation, data privacy, and accountability. Establishing ethical frameworks can guide decision-making and foster responsible AI practices.

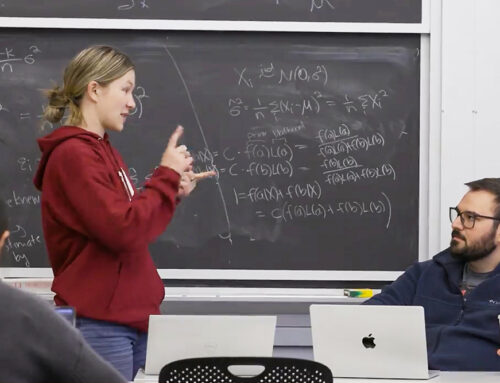

2. Diverse Development Teams

Building diverse development teams can help reduce the risk of bias in AI systems. By incorporating varied perspectives and experiences, organizations can better understand and mitigate the potential impacts of their technologies on different communities.

3. Engaging Stakeholders

Involving stakeholders—including those who might be affected by AI technologies—in the design and deployment processes ensures that diverse viewpoints are considered. Engaging the public in dialogue around AI ethics can cultivate a more inclusive approach to innovation.

4. Regulatory Oversight

Excessive regulation can stifle innovation, but a balanced regulatory approach is essential. Governments and regulatory bodies should establish frameworks that promote responsible AI development without discouraging innovation. Regulators should also prioritize adaptability, so that these frameworks evolve alongside technological advances.

5. Continuous Monitoring and Evaluation

AI systems should not be "set and forget." Continuous monitoring and evaluation can help identify and rectify issues such as bias or unforeseen consequences. Feedback loops and audits are critical for maintaining accountability and transparency over time.
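As a rough illustration of what such a feedback loop might look like, the sketch below tracks accuracy over a rolling window of recent predictions and flags the system for human review when accuracy falls too far below a baseline. The class name `AccuracyMonitor`, the baseline, the tolerance, and the window size are all assumptions chosen for the example, not recommended values.

```python
from collections import deque


class AccuracyMonitor:
    """Hypothetical rolling-window monitor for a deployed model."""

    def __init__(self, baseline, tolerance=0.05, window=100):
        self.baseline = baseline      # accuracy measured at deployment time
        self.tolerance = tolerance    # acceptable drop before raising a flag
        self.outcomes = deque(maxlen=window)  # keeps only the latest results

    def record(self, prediction, actual):
        """Store whether one prediction matched the observed outcome."""
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        """True when rolling accuracy is below baseline minus tolerance."""
        acc = self.rolling_accuracy()
        return acc is not None and acc < self.baseline - self.tolerance


# Toy usage with invented numbers.
monitor = AccuracyMonitor(baseline=0.90, tolerance=0.05, window=4)
for pred, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(pred, actual)

print(monitor.rolling_accuracy())  # 0.5
print(monitor.needs_review())      # True
```

In practice the same pattern could track per-group metrics rather than overall accuracy, so that an audit catches bias that emerges after deployment.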

Conclusion

As society stands on the brink of an AI-driven future, the ethical considerations surrounding this transformative technology are paramount. Balancing innovation with responsibility is not just a moral obligation; it is essential for fostering public trust and ensuring that the benefits of AI are enjoyed broadly and equitably. By prioritizing ethical principles and collaborative efforts, we can harness the power of AI to enrich lives while navigating its challenges with responsibility and care. The journey ahead is as exciting as it is complex, and it is one that we must embark on together, with caution and foresight.
