The growing reliance on artificial intelligence (AI) algorithms to automate decisions across sectors raises significant ethical concerns. From hiring practices to criminal justice, algorithmic decisions can deeply influence lives and livelihoods. As AI systems become more complex and more integrated into daily life, the push for ethical AI, and specifically for fairness in algorithmic decision-making, grows more urgent.

Understanding Algorithmic Bias

At its core, algorithmic bias occurs when an AI system produces outcomes that are prejudiced due to flawed data input or design. Historical biases can seep into algorithms through unrepresentative datasets, wherein certain demographic groups are overrepresented or underrepresented. For example, if a predictive policing algorithm is trained primarily on data reflecting past arrests, it may unfairly target specific communities based on historical law enforcement practices rather than actual criminal behavior.

Bias can also arise unintentionally through seemingly neutral criteria that disproportionately disadvantage certain groups. A classic example is a hiring algorithm trained on data that reflects past employee demographics. If past hiring practices were biased against certain groups, the AI may perpetuate those biases, further entrenching existing inequalities.
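One way to check whether a seemingly neutral criterion disadvantages a group is to compare selection rates across groups. The sketch below is illustrative only: the function names, group labels, and counts are hypothetical, and the ratio it computes is one common screening measure rather than a complete test of fairness.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the fraction of positive decisions per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the criterion admitted the candidate.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no gap between groups
    return min(rates.values()) / highest

# Hypothetical data: one screening rule applied to two groups of 100 people.
records = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)
rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
```

With these made-up counts the ratio is 0.5, meaning group_b is selected at half the rate of group_a. A ratio below 0.8 is often cited as a rule-of-thumb warning sign (the "four-fifths rule" from US employment selection guidance), though the right threshold depends on context and on sample size.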

The Importance of Fairness in AI

Fairness in algorithmic decision-making is paramount for several reasons:

- Social Justice: Unbiased algorithms promote equal opportunity and support social justice, ensuring that people are not unfairly deprived of rights or resources based on race, gender, socioeconomic status, or any other characteristic.

- Trust and Transparency: As AI usage grows, so does public scrutiny. Fair and transparent algorithms foster trust among users, allowing individuals to feel secure that their data is treated ethically.

- Business Sustainability: Companies that prioritize ethical AI practices can bolster their reputations, ultimately leading to consumer loyalty, competitive advantage, and long-term success.

- Legal Compliance: As regulations surrounding AI continue to evolve, organizations must comply with legal standards to mitigate risks associated with discrimination and bias in decision-making.

Strategies for Creating Fairness

To establish fairness in algorithmic decision-making, organizations and stakeholders must adopt several strategies:

1. Diverse and Representative Data

The foundation of fair algorithmic systems lies in the datasets they are trained on. Ensuring that training data is diverse and representative of the population will help mitigate bias. This can involve collecting additional data points to balance datasets or using synthetic data to fill gaps. Continuous monitoring of the data is also crucial, because the population a system serves, and the societal views and values reflected in its data, can change over time.
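As a rough illustration of what checking representativeness can look like, the sketch below compares each group's share of a dataset with a reference share and derives per-record weights that rebalance the groups. Reweighting is swapped in here as a simple stand-in for collecting or synthesizing more data. The function names, group labels, and reference shares are assumptions made for the example, and the code assumes every group in the data also appears in the reference.

```python
from collections import Counter

def representation_gaps(groups, reference_shares):
    """Compare each group's share of the dataset with a reference share.

    Returns {group: dataset_share - reference_share}; negative values
    mean the group is underrepresented relative to the reference.
    """
    counts = Counter(groups)
    n = len(groups)
    return {g: counts.get(g, 0) / n - share for g, share in reference_shares.items()}

def balancing_weights(groups, reference_shares):
    """Per-record weights so that weighted group shares match the reference.

    Assumes every group present in `groups` has an entry in `reference_shares`.
    """
    counts = Counter(groups)
    n = len(groups)
    return [reference_shares[g] / (counts[g] / n) for g in groups]

# Hypothetical dataset: 80 records from group "a", 20 from group "b",
# measured against an assumed 50/50 reference population.
groups = ["a"] * 80 + ["b"] * 20
reference = {"a": 0.5, "b": 0.5}

gaps = representation_gaps(groups, reference)
weights = balancing_weights(groups, reference)
weighted_b_share = sum(w for g, w in zip(groups, weights) if g == "b") / sum(weights)
print(gaps, weighted_b_share)
```

Rerunning a check like this on fresh data at regular intervals is one simple form of the continuous monitoring described above. Weights correct for imbalance in counts only; they cannot repair labels that already encode biased decisions.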

2. Algorithmic Audits

Regular audits of AI algorithms can identify and address biases post-deployment. These audits should assess not only the algorithm’s accuracy but also its fairness across various demographic groups. Organizations can employ fairness metrics and benchmarks to ensure their algorithms do not disproportionately disadvantage any group.
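A minimal sketch of such an audit, assuming binary labels and predictions tagged with a group, is shown below. It reports accuracy and true positive rate per group and the largest true positive rate gap, which is one of several possible fairness metrics. The record layout, function names, and sample data are hypothetical.

```python
from collections import defaultdict

def audit_by_group(records):
    """Compute per-group accuracy and true positive rate (TPR).

    `records` is an iterable of (group, y_true, y_pred) with 0/1 labels.
    """
    stats = defaultdict(lambda: {"n": 0, "correct": 0, "pos": 0, "true_pos": 0})
    for group, y_true, y_pred in records:
        s = stats[group]
        s["n"] += 1
        s["correct"] += int(y_true == y_pred)
        if y_true == 1:
            s["pos"] += 1
            s["true_pos"] += int(y_pred == 1)
    report = {}
    for group, s in stats.items():
        report[group] = {
            "accuracy": s["correct"] / s["n"],
            # TPR is undefined when a group has no actual positives.
            "tpr": s["true_pos"] / s["pos"] if s["pos"] else None,
        }
    return report

def tpr_gap(report):
    """Largest difference in true positive rate between any two groups."""
    tprs = [r["tpr"] for r in report.values() if r["tpr"] is not None]
    return max(tprs) - min(tprs) if tprs else 0.0

# Hypothetical audit sample: the model misses more true positives in group_b.
records = (
    [("group_a", 1, 1)] * 3 + [("group_a", 1, 0)] * 1 + [("group_a", 0, 0)] * 4
    + [("group_b", 1, 1)] * 1 + [("group_b", 1, 0)] * 3 + [("group_b", 0, 0)] * 4
)
report = audit_by_group(records)
gap = tpr_gap(report)
print(report, gap)
```

In this made-up sample, overall accuracy would hide the problem: the model finds 75% of the true positives in group_a but only 25% in group_b. Reporting metrics per group, rather than in aggregate, is what lets an audit surface that kind of gap.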

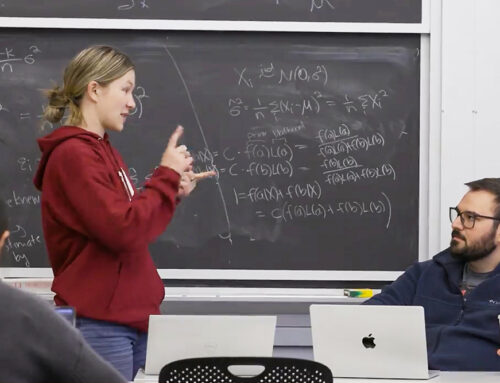

3. Inclusive Development Teams

Diverse development teams that include individuals from various backgrounds can better identify potential blind spots in the design and implementation phases. An interdisciplinary approach that brings together ethicists, data scientists, sociologists, and community representatives can help ensure that the needs and considerations of all stakeholders are taken into account.

4. Ethical Guidelines and Frameworks

Establishing clear ethical guidelines and frameworks for AI development is critical. These frameworks should outline best practices, compliance with legal standards, and methods for mitigating bias. They can serve as a roadmap for developers, ensuring that ethical considerations are integrated at every stage of the AI lifecycle.

5. Engaging with Affected Communities

Incorporating feedback from affected communities can provide valuable insights into the potential impact of algorithms. Engaging with these communities fosters trust and opens dialogue about real-world implications, allowing for a more nuanced understanding of fairness.

6. Transparency and Accountability

Transparency in algorithmic decision-making processes is crucial for accountability. Organizations should disclose how AI algorithms are built, the data that informs them, and the potential risks involved. This not only enhances trust but also empowers users to understand how decisions that affect them are made.

Conclusion

The challenge of creating fairness in algorithmic decision-making is multifaceted, requiring a concerted effort from various stakeholders, including technologists, policymakers, and communities. As AI continues to permeate our lives, implementing ethical AI practices becomes imperative. By prioritizing fairness, transparency, and accountability, we can harness the power of AI to promote social good and create systems that empower rather than discriminate. Ethical AI is not merely a technological challenge—it is a moral imperative for our increasingly interconnected world.
