Human Oversight vs. Automation: Finding the Right Balance in AI Security

As artificial intelligence (AI) continues to evolve and permeate various sectors, the conversation surrounding its implementation, particularly in the realm of security, has become increasingly crucial. The benefits of automation are undeniable; systems can process vast amounts of data, identify patterns, and respond to threats far more quickly than humans can. However, this efficiency raises important questions about oversight: How do we integrate human judgment in an era dominated by machines? Finding the right balance between human oversight and automation is essential for creating a robust security framework in AI applications.

The Case for Automation in AI Security

Automation in AI security has several compelling advantages. First and foremost is speed. Automated systems can analyze threats in real time, detect anomalies, and initiate responses within milliseconds. This rapid response is vital in a landscape where cyber threats are increasingly sophisticated, constantly evolving, and relentless.

Furthermore, automation reduces the scope for human error, an essential consideration when even a minor oversight can lead to a significant security breach. Algorithms can sift through volumes of data that no human team could review in time, identifying and mitigating threats along the way. In this context, automation provides a level of consistency and reliability that can significantly strengthen an organization's security posture.

Additionally, as organizations expand their digital footprints, the scale of potential cyber threats increases. Automation allows businesses to monitor systems continuously, mitigating risks that would otherwise overwhelm a human security team.

The Need for Human Oversight

Despite the many advantages that automation offers, AI systems, regardless of their sophistication, are inherently limited. AI algorithms are trained on existing data and can reflect the biases and errors in that data. They can misread context, producing false positives or false negatives that a human might easily catch. Furthermore, automated systems lack the ethical judgment and understanding of human context that often underpin difficult security decisions.

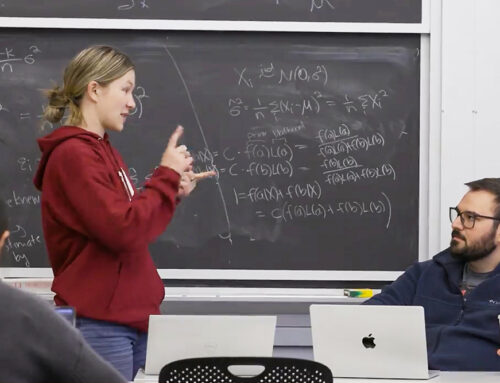

Human oversight is crucial for providing the nuance that machines often miss. Security professionals can contextualize anomalies, understand intricate patterns of behavior, and apply ethical reasoning in ways that automated systems cannot. In high-stakes scenarios—such as those involving national security or critical infrastructure—human intervention can prove invaluable, allowing for informed decision-making amidst complex or ambiguous situations.

Moreover, the very nature of cybersecurity is evolving. As attackers refine their evasion methods, relying on social engineering, phishing, and other tactics that exploit human behavior rather than technical flaws, human oversight grows increasingly vital. A well-trained analyst can recognize the subtle signs of an impending threat that an automated system might overlook.

Striking the Right Balance

Finding the right balance between human oversight and automation is not a matter of choosing one over the other; instead, it involves a strategic integration of both elements. Organizations should consider a hybrid approach, where automation handles repetitive tasks and data analysis while human experts oversee critical decision-making and strategic planning.

- Layered Security Ecosystem: Organizations can develop a layered security architecture combining automated detection tools with human oversight. For instance, automated systems can flag potential threats, while human analysts assess the severity and determine the appropriate response.

- Continuous Learning: AI technologies should be designed to learn from human insights. When security professionals review automated decisions, their feedback can be fed back into the systems, reducing biases and improving accuracy over time.

- Regular Auditing: Conducting regular audits of automated systems can help ensure that they operate as intended and that any potential biases are identified and corrected. This process should involve human experts who can interpret results in the broader organizational context.

- Training and Education: Regular training sessions for security personnel in AI and automation technologies are essential. Understanding how these systems work will enable human experts to make more informed decisions when intervening in automated processes.

- Establishing Clear Protocols: Organizations should establish clear protocols defining when human intervention is necessary. These protocols can outline specific scenarios that warrant human oversight, ensuring that critical situations are handled appropriately.
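
To make the flag-then-review pattern and the idea of an explicit intervention protocol more concrete, here is a minimal Python sketch. It is an illustration only, not a production design: the `Alert` fields, the 1-to-5 severity scale, the threshold values, and the function names are all assumptions made for this example, and any real deployment would tune them to its own risk tolerance.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Sequence


class Route(Enum):
    AUTO_RESPOND = "auto_respond"   # automation acts without waiting
    HUMAN_REVIEW = "human_review"   # an analyst decides
    LOG_ONLY = "log_only"           # recorded for later audits


@dataclass(frozen=True)
class Alert:
    source: str                   # hypothetical detector name
    severity: int                 # assumed scale: 1 (low) to 5 (critical)
    confidence: float             # detector score in [0, 1]
    critical_asset: bool = False  # e.g. critical infrastructure


def route_alert(
    alert: Alert,
    review_severity: int = 4,      # assumed threshold
    auto_confidence: float = 0.95, # assumed threshold
    noise_floor: float = 0.2,      # assumed threshold
) -> Route:
    """Apply a written escalation protocol to a single alert."""
    # High-stakes cases always go to a person, however confident the detector is.
    if alert.critical_asset or alert.severity >= review_severity:
        return Route.HUMAN_REVIEW
    # Routine, high-confidence detections are handled automatically.
    if alert.confidence >= auto_confidence:
        return Route.AUTO_RESPOND
    # Very weak signals are only logged so that audits can revisit them.
    if alert.confidence < noise_floor:
        return Route.LOG_ONLY
    # Everything in between is ambiguous and needs human judgment.
    return Route.HUMAN_REVIEW


def false_positive_rate(analyst_confirmed: Sequence[bool]) -> float:
    """Share of reviewed alerts that analysts judged NOT to be real threats."""
    if not analyst_confirmed:
        return 0.0
    rejected = sum(1 for confirmed in analyst_confirmed if not confirmed)
    return rejected / len(analyst_confirmed)


if __name__ == "__main__":
    alerts = [
        Alert("mail-filter", severity=2, confidence=0.97),
        Alert("mail-filter", severity=2, confidence=0.60),
        Alert("ids", severity=5, confidence=0.99),
        Alert("scada-monitor", severity=1, confidence=0.99, critical_asset=True),
    ]
    for alert in alerts:
        print(alert.source, alert.severity, route_alert(alert).value)
```

The design choice worth noting is the order of the checks: the high-stakes rule comes first, so no confidence score can bypass human review for critical assets. The `false_positive_rate` helper stands in for the feedback loop; tracking how often analysts reject automated flags gives auditors a simple signal for when thresholds need adjusting.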

Conclusion

The future of AI security will undeniably involve greater reliance on automation. However, as organizations increasingly integrate these technologies, the importance of human oversight must remain at the forefront of discussions. Achieving the right balance will not only enhance security measures but also ensure ethical, responsible, and effective use of AI in protecting our digital landscape. In this evolving environment, organizations must embrace a collaborative approach that leverages the strengths of both automation and human expertise to safeguard against ever-changing threats.
