The integration of artificial intelligence (AI) into Security Operations, commonly referred to as SecOps, is reshaping how organizations manage risk, respond to threats, and secure sensitive information. While AI presents numerous advantages for enhancing security measures, it also raises important ethical considerations that must be addressed to ensure responsible use. This article explores the ethical implications of deploying AI in SecOps, emphasizing accountability, privacy, fairness, transparency, and the need for human oversight.

1. Accountability

The deployment of AI in SecOps complicates accountability. When an AI system makes a decision, such as flagging a security threat or triggering an automated response, who is responsible for that decision? AI systems act on models trained on historical data, and that data may contain biases, errors, or gaps. Organizations must establish clear lines of accountability so that human operators remain responsible for significant decisions made by AI systems. This includes creating protocols for auditing AI-driven actions and ensuring that responsibility does not drift from named people to algorithms.
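One way to make such an auditing protocol concrete is to refuse to log any AI-driven action that lacks a named human owner. The sketch below is illustrative only: `AuditRecord`, `record_ai_action`, and every field name are hypothetical and not drawn from any particular SecOps product.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    """One AI-driven action plus the human accountable for it (hypothetical schema)."""
    action: str
    model_version: str
    confidence: float
    accountable_owner: str
    timestamp: str


def record_ai_action(action, model_version, confidence, accountable_owner, log):
    """Append an audit entry to `log`; reject actions with no named human owner."""
    if not accountable_owner.strip():
        raise ValueError("every AI-driven action needs a named human owner")
    record = AuditRecord(
        action=action,
        model_version=model_version,
        confidence=confidence,
        accountable_owner=accountable_owner,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    # Serialize to JSON so entries can be shipped to whatever log store is in use.
    log.append(json.dumps(asdict(record), sort_keys=True))
    return record


audit_log = []
record_ai_action("quarantine_host", "detector-v1", 0.93, "alice", audit_log)
```

In practice the list would be replaced by an append-only store, but the design point is the same: the owner is a required field, so accountability is captured at the moment the action is taken.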

2. Privacy Concerns

AI systems in SecOps often rely on vast amounts of data to improve threat detection and response capabilities. This data can include sensitive personally identifiable information (PII), communications, and behavioral patterns of employees and customers. The ethical handling of this data is critical. Organizations must implement robust privacy policies that comply with data protection regulations (such as GDPR) and ensure that individuals’ privacy rights are upheld. Techniques such as data anonymization, encryption, and restricted access controls should be employed to mitigate risks related to data collection and processing. Organizations also need to be transparent with stakeholders about how their data is used and what measures protect their privacy.
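As a rough illustration of reducing PII exposure before events reach a detection pipeline, the sketch below replaces selected fields with a keyed hash (HMAC-SHA256). Note that this is pseudonymization, not full anonymization: anyone holding the key can re-link identifiers, and pseudonymized data generally still counts as personal data under GDPR. The function names, field names, and key handling are assumptions made for the example.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Return a stable keyed hash of `value` (HMAC-SHA256, hex encoded)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_event(event: dict, pii_fields: set, key: bytes) -> dict:
    """Copy `event`, replacing the listed PII fields with pseudonyms."""
    return {
        name: pseudonymize(str(value), key) if name in pii_fields else value
        for name, value in event.items()
    }


# Hypothetical event and key; a real key would come from a secrets manager.
secret_key = b"example-key-do-not-use"
raw_event = {"user": "alice@example.com", "src_ip": "10.0.0.7", "bytes_sent": 5120}
safe_event = scrub_event(raw_event, {"user", "src_ip"}, secret_key)
```

Because the same input always maps to the same pseudonym under a given key, behavioral correlation across events still works while the raw identifier stays out of the analytics store.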

3. Fairness and Bias

AI algorithms can unintentionally perpetuate biases present in their training data. In the context of SecOps, this can lead to disproportionate targeting of specific groups or individuals, resulting in unfair treatment and potentially discriminatory outcomes. For instance, an AI system tasked with monitoring network behavior might misidentify benign activities by certain user groups as suspicious based on biased historical data. To counteract this, organizations must regularly evaluate and audit their AI systems for biases, involve diverse teams in the development of AI algorithms, and establish fair criteria for threat detection and response.
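A simple starting point for such an audit is to compare false positive rates across user groups: how often benign activity from each group is flagged as suspicious. The sketch below assumes labeled historical events are available as `(group, was_flagged, was_malicious)` tuples; that layout and the group names are hypothetical, and this is one of several possible fairness metrics rather than a complete audit.

```python
from collections import defaultdict


def false_positive_rate_by_group(events):
    """Return, per group, the share of benign events that were flagged."""
    benign = defaultdict(int)
    flagged_benign = defaultdict(int)
    for group, was_flagged, was_malicious in events:
        if was_malicious:
            continue  # only benign activity can produce a false positive
        benign[group] += 1
        if was_flagged:
            flagged_benign[group] += 1
    return {group: flagged_benign[group] / benign[group] for group in benign}


# Hypothetical labeled history.
history = [
    ("engineering", True, False),
    ("engineering", False, False),
    ("sales", False, False),
    ("sales", False, False),
    ("engineering", True, True),
]
rates = false_positive_rate_by_group(history)
```

A large gap between groups does not prove discrimination on its own, but it is a signal that the training data or detection criteria deserve a closer look.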

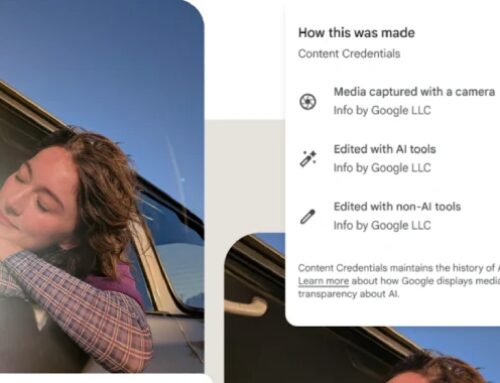

4. Transparency and Explainability

AI systems, particularly those based on complex models like deep learning, can act as "black boxes," making it difficult to understand how decisions are made. This opacity can hinder trust among stakeholders, from employees to regulators. In SecOps, understanding the rationale behind threat detection and response actions is critical, especially when those decisions impact individuals’ privacy and security. Organizations should prioritize transparency by providing explanations for AI-driven decisions, adhering to principles of explainability, and ensuring that stakeholders are informed about how AI contributes to security operations. This can foster trust and improve the overall security culture within the organization.

5. Human Oversight

Despite the capabilities of AI in handling security threats, human oversight remains essential. Automated systems can analyze vast amounts of data and quickly identify anomalies, but they may lack the nuanced understanding of context that human operators possess. Critical decisions, especially those involving potential legal or ethical implications, should involve human judgment. Training and empowering security teams to effectively collaborate with AI systems can lead to more informed decision-making and strengthen the overall security posture of the organization. Additionally, organizations should establish clear guidelines that dictate when human intervention is necessary, particularly in high-stakes scenarios.
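Guidelines for when human intervention is necessary can be encoded as an explicit gate in front of automated responses. The following is a minimal sketch under assumed rules: the action names, the `HIGH_IMPACT_ACTIONS` set, and the 0.9 confidence threshold are placeholders that each organization would define for itself.

```python
from dataclasses import dataclass

# Hypothetical set of actions considered too consequential to automate fully.
HIGH_IMPACT_ACTIONS = {"disable_account", "isolate_host", "delete_data"}


@dataclass
class Proposal:
    """A response action proposed by the AI system, with its confidence score."""
    action: str
    confidence: float


def requires_human_review(proposal: Proposal, min_confidence: float = 0.9) -> bool:
    """Route to a human if the action is high impact or the model is unsure."""
    return (
        proposal.action in HIGH_IMPACT_ACTIONS
        or proposal.confidence < min_confidence
    )


def dispatch(proposal: Proposal) -> str:
    """Return the queue a proposal should go to."""
    return "human_review" if requires_human_review(proposal) else "auto_execute"
```

Keeping the rule in one small function makes the policy easy to audit and change, and ensures high-stakes actions reach a person regardless of how confident the model is.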

6. Continuous Monitoring and Adaptation

As technology evolves, so too do the ethical implications surrounding AI. Organizations must commit to continuous monitoring and adaptation of their AI systems and the ethical frameworks governing them. This involves staying updated with advancements in AI, emerging threats, and shifts in societal expectations regarding privacy and data protection. Regular assessments can help identify vulnerabilities, biases, or ethical concerns that may arise as AI systems acquire new data and functionalities.

Conclusion

The deployment of AI in SecOps presents both opportunities and challenges, requiring careful consideration of ethical implications. By addressing accountability, privacy, fairness, transparency, and the necessity of human oversight, organizations can harness the power of AI while upholding ethical standards and fostering trust among stakeholders. As technology continues to advance, a proactive approach to ethical AI deployment will be essential for ensuring a secure and equitable digital landscape.
